Mandelbulb Terraforming: Ray Tracing Fractals

September, 2017.

Following on from the Swarm Mech Assembly post on my initial foray into rendering fractal-based 3D geometry, I’ve finally gotten around to another exploratory session. The focus this time was to try and capture something of the more classical 3D CGI style I remember from the early 90s and, as promised, the following is a little further narrative on the process and tools involved.

It was while browsing the site of Ryan Geiss (the guy behind the classic MilkDrop music visualizer for Winamp, among other things) that I stumbled across Gpucaster, a small program of his that “uses your GPU to quickly render highly-detailed, arbitrary 3D isosurfaces (functions) with semi-realistic lighting (5 point light sources with ambient occlusion)”. By his own admission, Gpucaster is something Ryan “threw together under the worst conditions possible” and, while very capable of generating some beautiful images, it’s a little underdeveloped in places and somewhat less than inviting to work with.

As is often the case with these things on my part, various work and study commitments at the time meant that after a brief but intriguing evening of cursory investigation, Gpucaster found its way onto a neglected list of ‘things to explore in more detail at a later date’.

![ScratchPad #004 [detail]](http://inmosystems.com/wp-content/uploads/scratchPad004thumb-960x710.jpg)

Some time later, while searching for new content to try out with the second Oculus VR dev kit, I came across a ‘demo’ called Foreign Nature by a guy called Julius Horsthuis. The accompanying screenshots left me intrigued as to how something that looked like a traditional ray traced fractal could possibly render in real time at the 75 Hz frame rate required for the HMD. Somewhat disappointingly, it in fact turned out to be a pre-rendered 360 degree panoramic video, meaning no real 3D depth or positional tracking.

I went ahead and downloaded the 4K video regardless and, after floating through its roughly 10 minute duration in the headset, was quite surprised at how engaging an experience it turned out to be, even with its limitations. Immediately reminded of my earlier excursion with Gpucaster, I was curious to learn more about the tools Julius had used to create the video and that’s when I came across Mandelbulb 3D.

So what is Mandelbulb 3D? Well, according to Mandelbulb.com…

“Mandelbulb 3D is a free software application created for 3D fractal imaging. Developed by Jesse and a group of Fractal Forums contributors, based on Daniel White and Paul Nylander’s Mandelbulb work, MB3D formulates dozens of nonlinear equations into an amazing range of fractal objects. The 3D rendering environment includes lighting, color, specularity, depth-of-field, shadow- and glow- effects; allowing the user fine control over the imaging effects.”

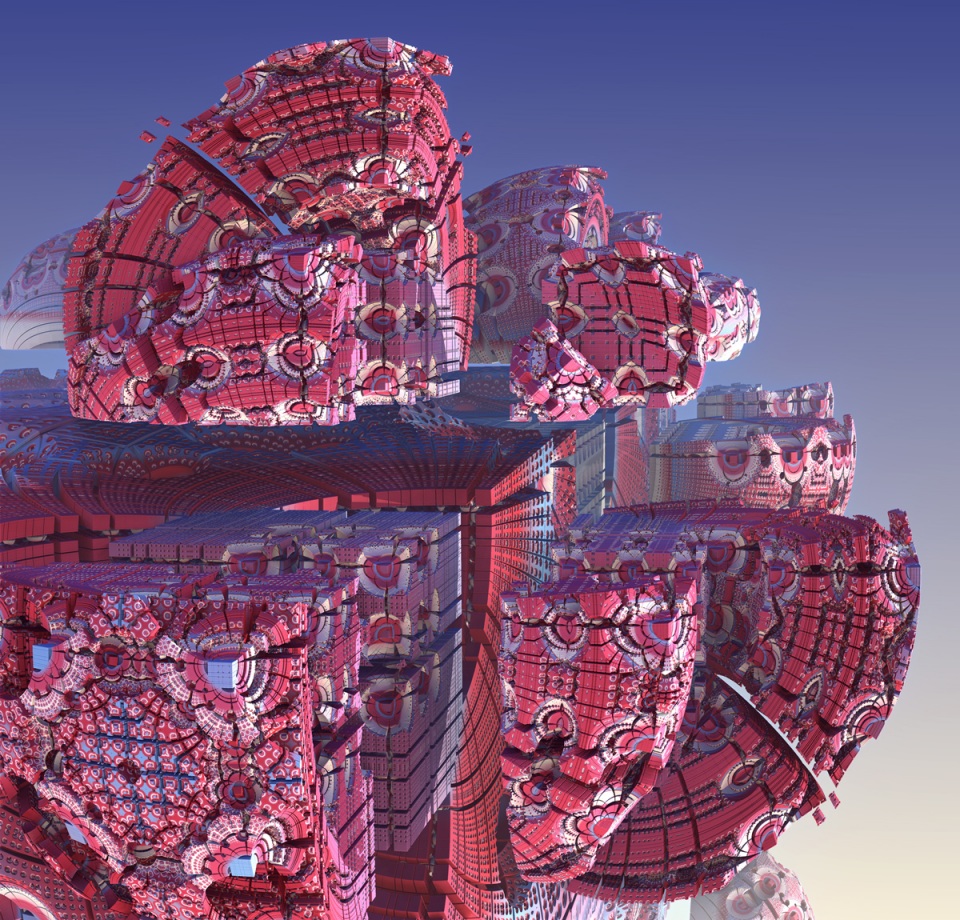

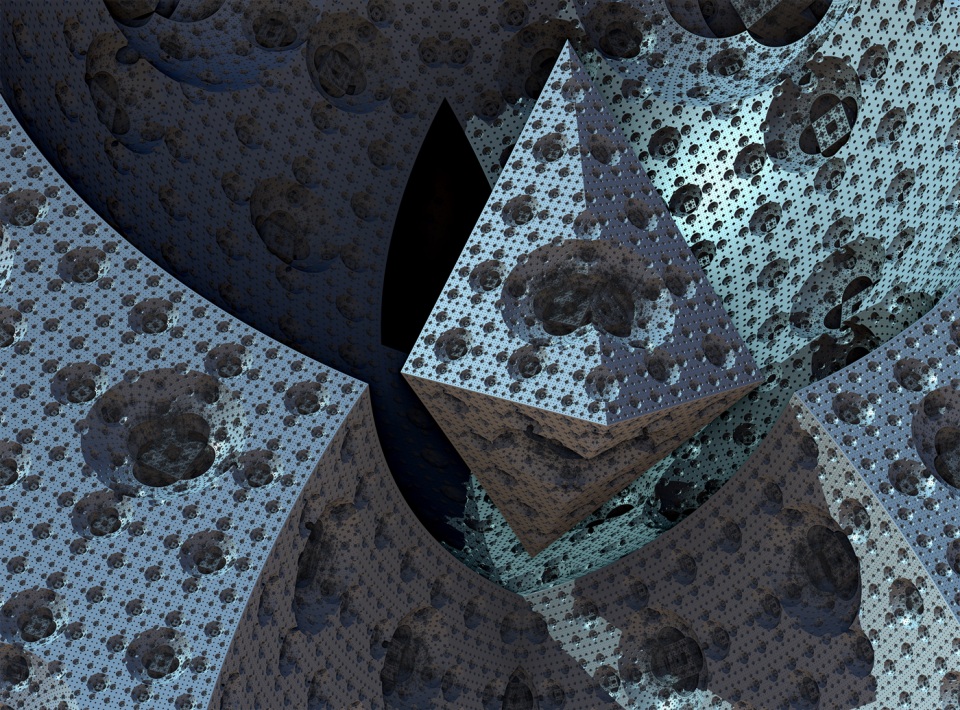

Like Gpucaster, Mandelbulb 3D provides the tools to render some really stunning scenes of mathematically generated, ray traced 3D geometry. Its feature set is significantly larger than Gpucaster’s but, perhaps more importantly, the accompanying UI, while still a little less than intuitive, remains approachable enough to yield a wide variety of genuinely creative results in a more reasonable amount of time. I created the images accompanying this post in a single afternoon session of initial experiments.

![scratchPad #002 [detail]](http://inmosystems.com/wp-content/uploads/scratchPad002detail_01-960x624.jpg)

While many aspects of Mandelbulb 3D’s rendering environment will be familiar to those with a background in 3D graphics (lights, camera, etc), it’s the approach to geometry that differs significantly from the traditional modelling process. This isn’t a blog post about what fractals are, an infinitely fascinating subject in itself (pun intended), but if you have even the slightest interest in the nature of such things, I highly recommend some basic research. This series of four short articles is a great place to start [1] [2] [3] [4], the embedded videos at the end of each one in particular. Imaginary numbers and the complex plane turn out to be far less intimidating than they sound and, with even a basic understanding of what’s happening, it really is hard to walk away without a renewed fascination for the natural world around you.

For those less inclined to dig into the math, one way to get some intuition of what’s happening is to look at the brief Wikipedia page on the Menger Sponge, specifically the first section titled Construction. Of key significance is the final part about iterating ad infinitum, where the output of previous iterations is passed as input to subsequent iterations, thus forging further self-similar detail onto geometry at increasingly smaller scales. The greater the number of iterations, the higher the level of detail at the object’s current scale. This is the essence of what you are seeing when ‘zooming through’ a fractal image, exploring what appear to be infinitely emerging self-similar patterns.
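For anyone who prefers code to prose, the Menger Sponge construction can be sketched in a few lines of Python. This is purely an illustration of the ‘feed the output back in as input’ idea and not how Mandelbulb 3D or Gpucaster work internally; the function names and the `(x, y, z, size)` cube representation are my own assumptions for the example.

```python
from itertools import product


def menger_step(cubes):
    """Apply one Menger iteration to a list of (x, y, z, size) cubes.

    Each cube is split into a 3x3x3 grid of sub-cubes; the 6 face
    centres and the single body centre are discarded, leaving 20.
    """
    result = []
    for x, y, z, size in cubes:
        third = size / 3
        for i, j, k in product(range(3), repeat=3):
            # A sub-cube is removed when two or more of its grid
            # indices are the middle one (index 1).
            if (i == 1) + (j == 1) + (k == 1) >= 2:
                continue
            result.append((x + i * third, y + j * third, z + k * third, third))
    return result


def menger_sponge(iterations):
    """Start from a unit cube and feed each result back into the next step."""
    cubes = [(0.0, 0.0, 0.0, 1.0)]
    for _ in range(iterations):
        cubes = menger_step(cubes)
    return cubes
```

Each pass multiplies the number of cubes by 20 while shrinking their edge length to a third, so `menger_sponge(3)` already holds 8000 cubes. That rapid growth is the same trade-off between iteration count and detail described above.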

Thankfully, Mandelbulb 3D comes with a library of fractal formulas to use as starting points, allowing you to modify and combine up to six to iterate with simultaneously. With some careful experimentation you can begin to intuitively generate some obscenely complex geometry, but attempting to fashion pre-conceived shapes of a specific arbitrary nature continues to feel quite elusive. Instead the process is a combination of searching and modifying; exploring the results of combined formulae, then mindfully adjusting their values to refine the output.

This is all a lot of fun and certainly results in engaging abstract imagery but the question remains, how can this process be incorporated into a larger, more directed creative pipeline? One significant feature of MB3D that I’m yet to mention is the ability to render video, as Julius did in Foreign Nature. To plot a course and zoom deep into these worlds of my own making in 360 degree panoramic video is certainly appealing. I suspect that with a little further investigation, elaborate static skybox environments can also be rendered for use in a game engine of choice but, as is the case with the 360 degree video content, care must be taken to ensure the camera remains at a significant distance from all surfaces in order to maintain a relatively convincing result.

Julius has created a great video examining a similar question regarding wider usefulness. He’s primarily approaching the subject from the perspective of VFX as opposed to real-time 3D engines but does mention the possibility of generating high poly meshes from the resulting geometry, which theoretically could be used to generate normal maps. From a practical point of view I get the feeling there are significant limitations here without a lot of re-ordering of other established steps in the pipeline. Nevertheless, I’m a huge fan of the aesthetic and, as and when time allows, I certainly plan to return and continue exploring.

Roles: Designer, Developer, 3D Artist.